What we accomplished

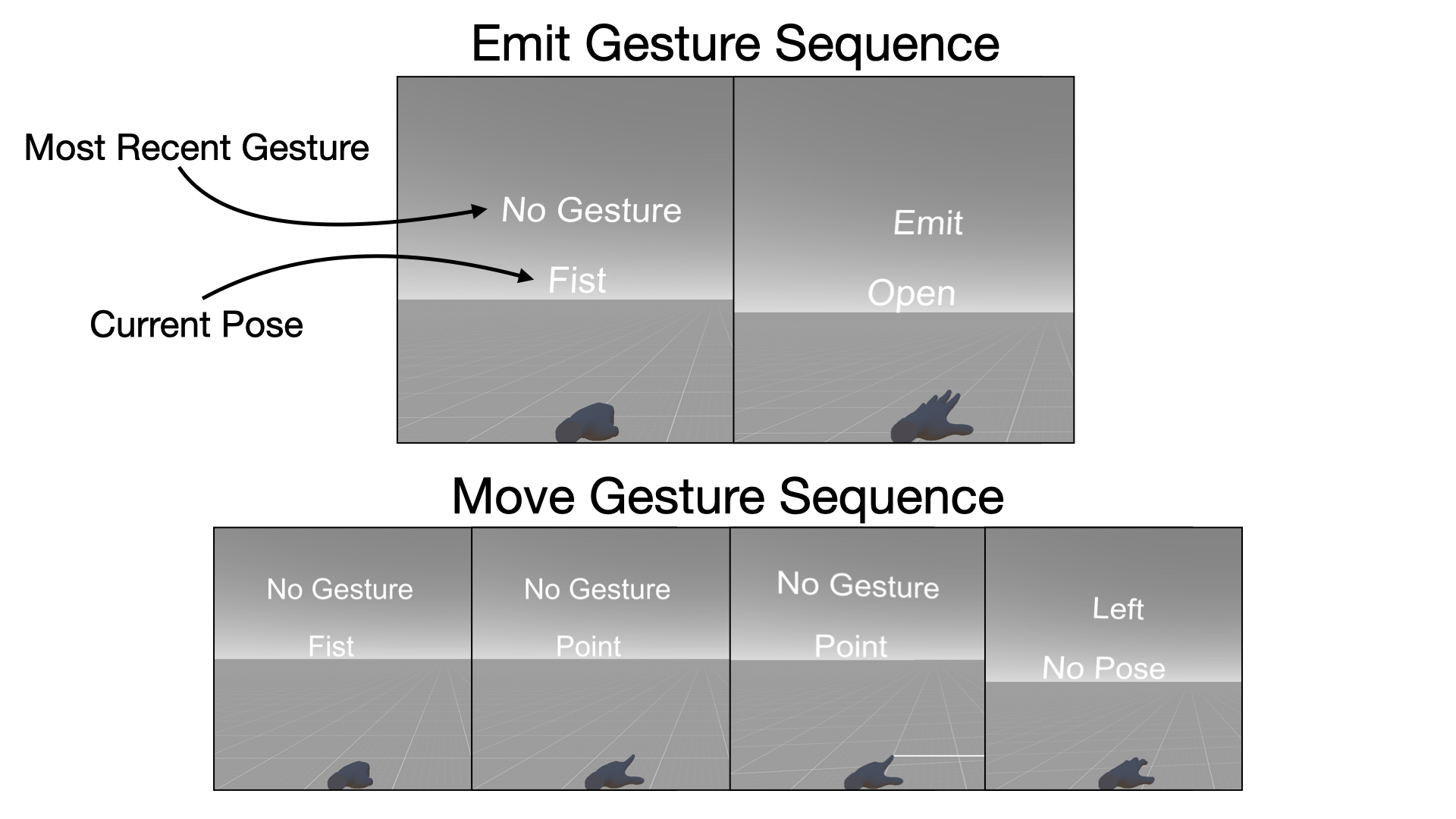

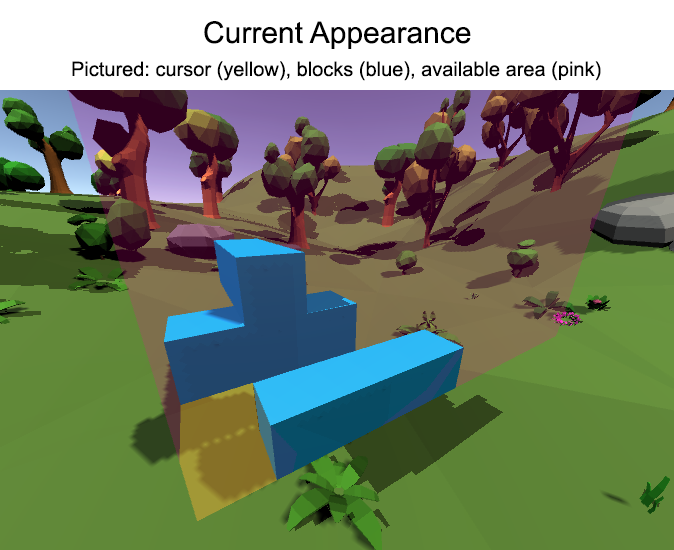

We continued working on the MVP this week. We got gesture data collection working (i.e., saving the gesture that one of your hands is making in Unity locally on the Quest and then transferring that data to a computer). We also refactored the gesture and pose recognition so that it can trigger arbitrary events, which makes integration with the backend easier, and we implemented the move and emit gestures using the pose recognition we built last week. Finally, we began work on the backend to support more complex functions (i.e., looping and modules), but we are putting this work on hold for now in order to finish the MVP.

- Logan: Refactored gesture and pose recognition to make integration with the backend easier, and implemented the move and emit gestures using last week's pose recognition.

- Sea-Eun + Yuma: Began implementation of more complex functions in the backend.

- Erik: Implemented gesture data collection and figured out how to transfer the data from the program to the Quest and then to a computer.

Plan for next week

We need to finish our MVP by the beginning of next week. We will also be presenting our MVP and video demos, so we will need to spend some time figuring out how to stream our demo to the class. After the MVP, we will continue improving the hand gestures if necessary, and we will begin working on the module component of the backend.

Upcoming challenges

By the beginning of next week we will be integrating the work we have been doing independently, and this may prove harder than we anticipate. Currently, one person is recording the hand gestures, and we are hoping those recordings will work for the other members of the team; however, the gestures might be too specific to the person who recorded them (this is why we allocated some time next week to improve the hand gestures if necessary).