What we accomplished

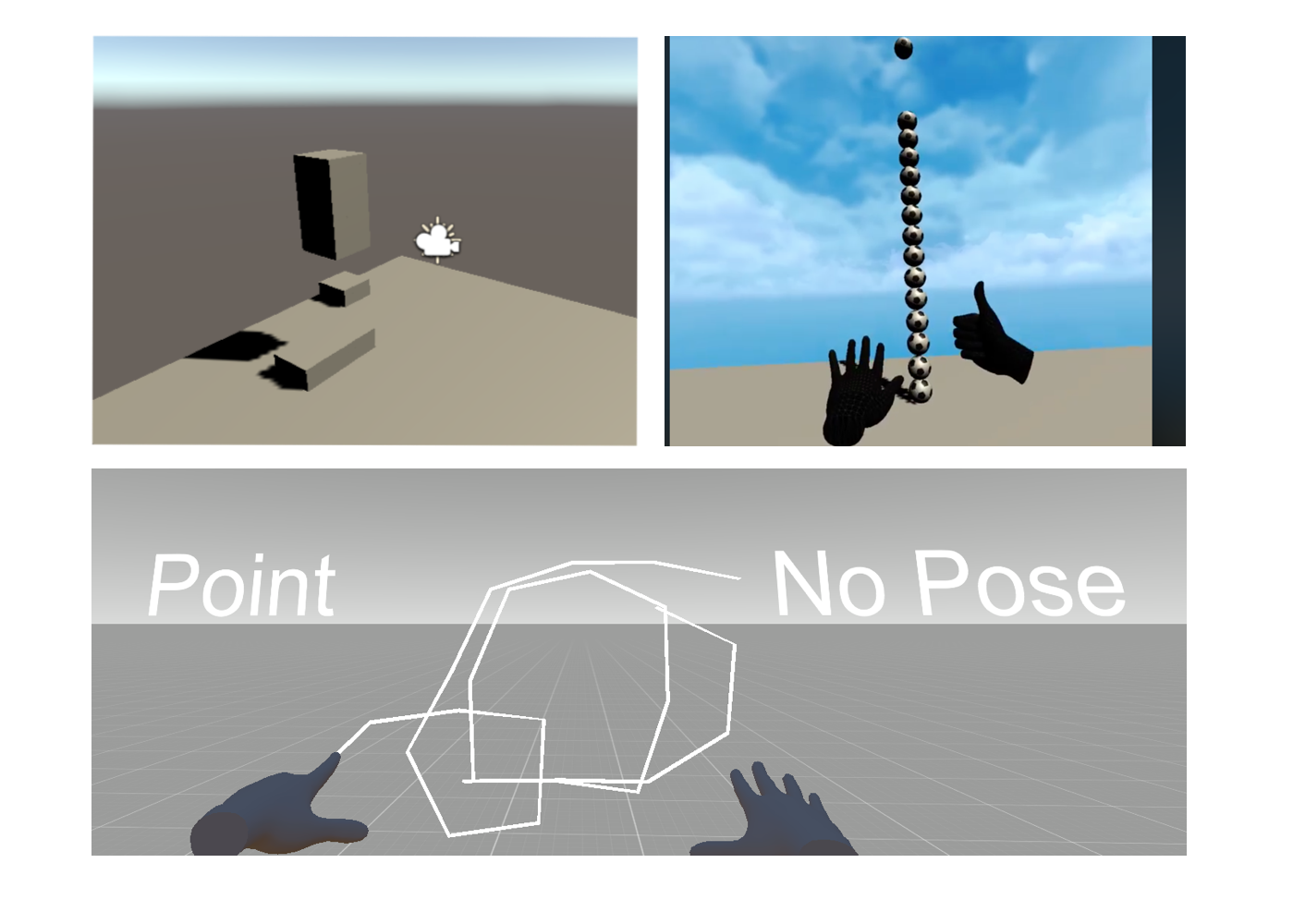

We met our goal of starting to implement hand tracking and the backend needed for basic programming concepts. Hand tracking now triggers an action (e.g., creating an object) when the user makes a specific pose with their hands. The backend supports moving, emitting, and deleting blocks through keyboard actions.

- Logan: Researched and set up pose recognition for emit and block movement. Set up an emulated VR scene that lets us develop hand-tracking features without building to the device every time, which had been a pain point for us.

- Sea-Eun + Yuma: Pair programmed and began implementing the backend Unity environment where users will be programming. Wrote initial code to emit, move, and delete blocks.

- Erik: Researched and set up pose recognition for an initial looping attempt.

Plan for next week

We would like to have most of our MVP finished by the end of next week. This involves polishing the backend and finalizing the specific poses we are using for hand recognition. We also need to extend pose recognition to detect movement (e.g., the user makes a pose with their index finger and then moves their hand in a certain direction to indicate which direction the block should move). We are aiming to merge all components into a single repository to combine the work we did this week. We also need to make some final design decisions about what the environment will look like for the MVP (e.g., where the blocks will be constrained and the theme).

- Logan + Erik: Finish pose and gesture recognition for block movement, emit, looping, and defining modules.

- Sea-Eun + Yuma: Finish backend that supports block movement, emit, looping, and defining modules.
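
The pose-then-move gesture described in the plan above could be prototyped roughly as follows. This is only a sketch of one possible approach, not our implementation: it is written in Python for brevity rather than in Unity C#, and the function name `classify_direction`, the `min_distance` threshold, and the direction labels are all hypothetical. It assumes we can sample the hand position when the index-finger pose starts and again when it ends, and that the axes follow Unity's convention (+x right, +y up, +z forward).

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical labels per axis as (negative, positive).
# Assumes Unity's convention: +x is right, +y is up, +z is forward.
AXIS_NAMES = (("left", "right"), ("down", "up"), ("back", "forward"))


def classify_direction(start: Vec3, end: Vec3,
                       min_distance: float = 0.05) -> Optional[str]:
    """Map a hand displacement to the axis-aligned direction it mostly follows.

    Returns None when the movement along the dominant axis is shorter than
    min_distance (an assumed threshold, in the same units as the positions),
    so small jitters while holding the pose do not move a block.
    """
    # Displacement of the hand between the two samples.
    delta = tuple(e - s for s, e in zip(start, end))

    # Pick the axis with the largest absolute displacement.
    axis = max(range(3), key=lambda i: abs(delta[i]))

    # Ignore movements that are too small to be intentional.
    if abs(delta[axis]) < min_distance:
        return None

    negative, positive = AXIS_NAMES[axis]
    return positive if delta[axis] > 0 else negative
```

For example, `classify_direction((0, 0, 0), (0.2, 0.01, 0))` returns `"right"`, while a tiny movement such as `(0.01, 0, 0)` returns `None`. Snapping to the dominant axis keeps the mapping simple, at the cost of ignoring diagonal movements.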